I've been meaning to start writing here for months. Kept putting it off. Then I went through Interinstitutional File: 2022/0155(COD). Sometimes a piece of legislation is so absurd it breaks through your writer's block. Specifically, Section 12A.

The European Union's proposal, officially titled "Regulation to Prevent and Combat Child Sexual Abuse", would mandate client-side scanning of all private communications, breaking encryption for 450 million citizens. Every. Single. One. Your texts to your spouse. Your teenager's Instagram DMs. That embarrassing medical photo you sent your doctor. All of it, scanned by algorithms whose reports, according to Swiss authorities, are false positives 80% of the time. For child safety, they say. Meanwhile, Section 12A specifically excludes "electronic communications services that are not publicly available", meaning classified government systems like Sectra Tiger/R stay untouched.

Now look, I'm not saying government classified systems shouldn't be secure. But they're creating a two-tier system where certain communications get protection while yours don't. The principle bothers me, even if the technical scope is narrower than it first appears.

But it gets worse. The exemptions aren't just for classified systems—they explicitly carve out 'professional accounts of staff of intelligence agencies, police and military.' As Pirate Party MEP Patrick Breyer put it: 'The fact that the EU interior ministers want to exempt police officers, soldiers, intelligence officers and even themselves from chat control scanning proves they know exactly just how unreliable and dangerous the snooping algorithms are that they want to unleash on us citizens.'

Think about that for a second. If the scanning is reliable enough to trust with detecting actual crimes against children, why do intelligence agencies need protection from those same algorithms? Either the technology is trustworthy enough for detecting serious crimes, or it isn't.

The Democracy Problem Nobody Wants to Discuss

Plato argued democracy rewards good messaging over good policy. Watch how it works: unelected Commission officials propose mass surveillance, frame it as 'child protection,' and suddenly elected politicians can't oppose it without looking like they don't care about kids. The Parliament that's supposed to check them faces impossible political pressure—who votes against 'protecting children'?

About Those Statistics

Let’s address some of the numbers they're using to justify this.

"One in five children experiences some form of sexual violence before age 18"—this comes from the Council of Europe and includes non-contact abuse. It's real, it's horrifying, but it doesn't automatically mean mass surveillance is the solution.

The 59% hosting statistic? Yes, the Internet Watch Foundation found that 59% of detected CSAM was hosted on EU servers in 2022, with 32% in the Netherlands alone. But this number reflects where criminals rent server space, not where abuse happens. The Netherlands has that high percentage because Amsterdam has some of the world's best internet infrastructure: exceptional speeds, low costs, reliable uptime. Criminals host where the servers are fast and cheap. It's that simple.

The IWF's own data shows this: Switzerland jumped from 1% to 8% because of two websites. Poland saw an 8,000% increase, mostly due to one problem site. That massive statistical jump doesn't mean Poland suddenly became a hotbed of abuse or that Polish law enforcement failed. It means one bad actor chose to host their illegal operation in Poland. It's not a surveillance failure; it's an infrastructure exploitation pattern.

The Emotional Manipulation Is Deliberate

The "protecting children" framing isn't accidental. Once you hear it, your risk assessment goes haywire; psychologists call this the affect heuristic, where gut feeling stands in for weighing actual risks and benefits. You stop asking practical questions like "will this even work?" and start feeling like opposition makes you complicit.

I noticed this in myself when I first read the proposal. My immediate thoughts were "well, if it helps kids..." and "I don't have anything to hide", before I caught myself and actually went through some of the details.

Even Their Own Legal Experts Think This Is Insane

The opposition isn't coming from privacy extremists or tech libertarians. The EU's own Legal Service warned that it violates fundamental rights (specifically Articles 7 and 8 of the EU Charter of Fundamental Rights, which cover respect for private life and communications and the protection of personal data).

The European Data Protection Board expressed "serious concerns". Former ECJ Judge Ninon Colneric called it a violation of "fundamental rights of all EU citizens". Even the UN Committee on the Rights of the Child said digital surveillance shouldn't be "routine or indiscriminate".

When your own legal team is telling you something's wrong, maybe... just maybe... something's wrong?

The Governments That Failed to Protect Now Want to Surveil

The institutions demanding access to your private messages have a documented history of failing to protect children in their own care—or worse.

Belgium's Dutroux affair exposed police ignoring a tip from Dutroux's own mother that he was holding girls in his house. When the investigating judge was removed from the case for attending a fundraising dinner for the victims' families, 300,000 Belgians marched against the cover-up.

Britain's Westminster investigation confirmed MPs were "known to be active in their sexual interest in children and were protected from prosecution." They got knighthoods instead. A dossier on Westminster pedophile networks? Mysteriously disappeared from Home Office files, along with 113 related documents.

Germany ran the Kentler Experiment—Berlin authorities deliberately placed homeless teenagers with known pedophiles as foster parents. This wasn't a failure of oversight. It was official policy, funded by the Berlin Senate, running from 1969 into the 2000s.

The pattern is consistent: protection over prosecution, missing documentation, delayed investigations. The very institutions that couldn't protect children in their own buildings now claim they need to read everyone's messages to protect children everywhere else.

Maybe fix your own houses first?

Technical Theater

The technical foundations of Chat Control are as flawed as its legal ones. Client-side scanning fundamentally breaks end-to-end encryption: it analyzes content on the device before it is encrypted, so encryption no longer guarantees that only the sender and recipient can read a message. The scanning layer itself becomes a new attack surface, open to state-sponsored hackers, criminals, ransomware gangs, foreign intelligence services, and authoritarian governments worldwide.

The implementation would require installing scanning algorithms directly on user devices to analyze messages, images, videos, and URLs in real time and report matches to authorities automatically. But sophisticated criminals won't be deterred: they can simply encrypt content locally, use steganography to hide illegal material inside innocent-looking images, or move to other platforms entirely.
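To make the "scan before encrypt" point concrete, here is a toy sketch of the pipeline described above. It's an illustration under loud assumptions, not how any real or proposed system is built: it matches exact SHA-256 hashes against a blocklist, while deployed tools use perceptual hashes (such as Microsoft's PhotoDNA) or machine-learning classifiers. `client_side_send`, the blocklist, the `report` hook, and the byte-reversal stand-in for encryption are all hypothetical. What it does show is the ordering: the scanner reads the plaintext, and anything it reports leaves the device unencrypted.

```python
import hashlib
from typing import Callable, List, Set


def sha256_hex(data: bytes) -> str:
    """Exact-match fingerprint (a simplification: real scanners use perceptual hashes)."""
    return hashlib.sha256(data).hexdigest()


def client_side_send(
    plaintext: bytes,
    blocklist: Set[str],
    encrypt: Callable[[bytes], bytes],
    report: Callable[[bytes], None],
) -> bytes:
    """Hypothetical client-side scanning hook in an end-to-end encrypted app.

    The scan runs on the device, on the plaintext, BEFORE encryption,
    so whatever it reports leaves the device unencrypted.
    """
    if sha256_hex(plaintext) in blocklist:
        report(plaintext)  # hypothetical reporting channel
    return encrypt(plaintext)


if __name__ == "__main__":
    def fake_encrypt(data: bytes) -> bytes:
        # Stand-in ONLY: reversing bytes is not encryption.
        return data[::-1]

    reports: List[bytes] = []
    blocklist = {sha256_hex(b"known-bad-file")}

    client_side_send(b"known-bad-file", blocklist, fake_encrypt, reports.append)
    client_side_send(b"known-bad-filE", blocklist, fake_encrypt, reports.append)  # one byte changed
    print(len(reports))  # 1: only the exact match was caught
```

In this toy, changing a single byte, or encrypting the file locally before sending, produces a different hash and slips past the blocklist. Perceptual hashes tolerate small edits like the one-byte change, but no scanner can match content that was encrypted before it reached the app, and that tolerance is part of what makes them fuzzy enough to produce false positives.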

Meanwhile, false positive rates would overwhelm law enforcement. Swiss authorities report that 80% of the machine-generated reports they receive are false positives, and even a theoretical 99% accuracy rate would generate millions of false reports every day, given the billions of messages Europeans send daily. Reviewing them by hand would be infeasible at that scale.
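The base-rate problem behind that claim can be worked out directly. This sketch assumes a made-up volume of one billion scanned messages a day and a made-up prevalence of one illegal item per million messages; neither number comes from the proposal or from Swiss authorities, and "99% accuracy" is read here as 99% sensitivity and 99% specificity.

```python
from dataclasses import dataclass


@dataclass
class ScanOutcome:
    true_reports: float   # illegal messages correctly flagged
    false_reports: float  # innocent messages wrongly flagged

    @property
    def precision(self) -> float:
        """Share of all reports that are actually illegal content."""
        total = self.true_reports + self.false_reports
        return self.true_reports / total if total else 0.0


def expected_daily_reports(messages_per_day: float, prevalence: float,
                           sensitivity: float, specificity: float) -> ScanOutcome:
    """Expected reports per day for a scanner with the given error rates."""
    illegal = messages_per_day * prevalence
    innocent = messages_per_day - illegal
    return ScanOutcome(
        true_reports=illegal * sensitivity,            # correct hits
        false_reports=innocent * (1.0 - specificity),  # false alarms on innocent messages
    )


# Hypothetical inputs: 1 billion scanned messages a day, 1 in a million illegal,
# "99% accurate" read as 99% sensitivity and 99% specificity.
out = expected_daily_reports(1_000_000_000, 1e-6, 0.99, 0.99)
print(f"{out.true_reports:,.0f} true vs {out.false_reports:,.0f} false reports")
print(f"precision: {out.precision:.4%}")
```

Under these assumptions the scanner produces roughly 990 true reports against about 10 million false ones, so fewer than 1 in 10,000 reports would point at real abuse material. The rarer the illegal content, the worse that ratio gets, no matter how "accurate" the model sounds.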

These technical flaws won't stop implementation—they'll accelerate it. Because for those who want surveillance infrastructure, a broken system that generates millions of false positives is actually better than one that works. It justifies expanding review powers, hiring more analysts, and building more infrastructure to 'fix' the problems. The failure becomes the reason for more control, not less.

The Authoritarian Playbook, European Edition

Every authoritarian surveillance system needs its excuse. Russia used "terrorism". China went with "social harmony". Iran invoked "public order and morality", and Myanmar's military leaned on "anti-terrorism". Ask the Uyghurs, Iranian women's rights activists, Russian anti-war and anti-government protesters, or Myanmar's democracy movement how that worked out.

The pattern holds: find the thing nobody can publicly oppose, then build your surveillance apparatus around it.

Europe's version is more sophisticated—we get to feel like we participated through democratic processes, signed our petitions, voiced our concerns. We get the illusion of resistance while the machinery grinds forward.

The technical reality is that once this infrastructure exists, it's there forever. New governments, new uses, new definitions of what's "harmful." The scanners looking for CSAM today become the scanners looking for "extremist content" tomorrow, "misinformation" next week, and whatever else sounds scary enough to justify their existence.

What Actually Works

Here's what drives me crazy—we already know what works against CSAM.

The Netherlands reduced its CSAM hosting from 41% to 29% between 2021 and 2024 through targeted pressure on hosting providers. No surveillance expansion needed. Just old-fashioned "fix your platform or face consequences" enforcement. INHOPE data shows 58% of reported content gets removed within 3 days. Good providers hit 90% removal within 24 hours.

Stanford researchers found that user reporting remains the most effective tool against online abuse—and it works fine in encrypted environments. The IWF identified and removed over 255,000 CSAM URLs in 2022 without reading anyone's private messages.

But targeted enforcement doesn't give you infrastructure to monitor all communications. It just protects kids.

Child protection experts consistently oppose mass surveillance, emphasizing that encryption and privacy are essential for children's safety, not threats to it. UNICEF notes that secure communications enable children to "learn, share, and participate in civic life" safely, while child abuse survivors emphasize they need confidential communications to seek help.

The Political Reality Check

Let's be honest about Fight Chat Control: sign it, absolutely. But understand what it is: a pressure valve. It channels opposition into a petition that makes us feel heard while the vote counts stay the same. Real power doesn't respond to online signatures; it responds to political cost. Yes, I'm telling you to sign a petition I just called a pressure valve. Because even pressure valves serve a purpose: they document opposition. When this goes wrong later, at least no one will be able to say they weren't warned.

The holdout countries need to hear from their citizens—loudly, repeatedly, in ways that make supporting this bill more trouble than it's worth. But these things tend to pass once they get this much institutional momentum behind them.

Where This Actually Leads

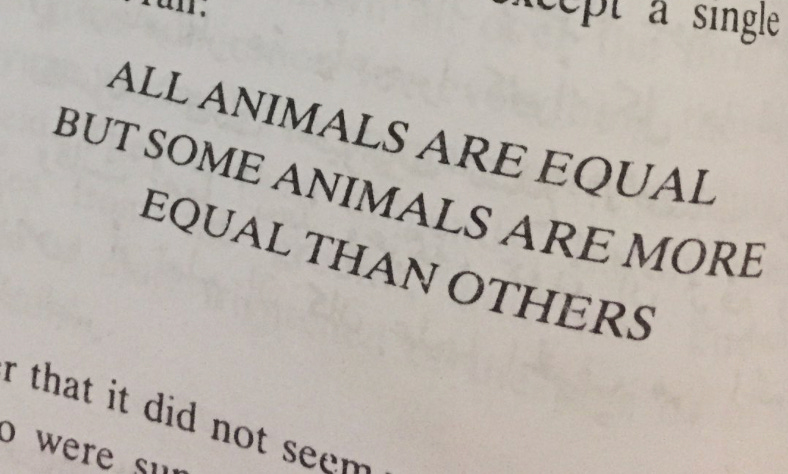

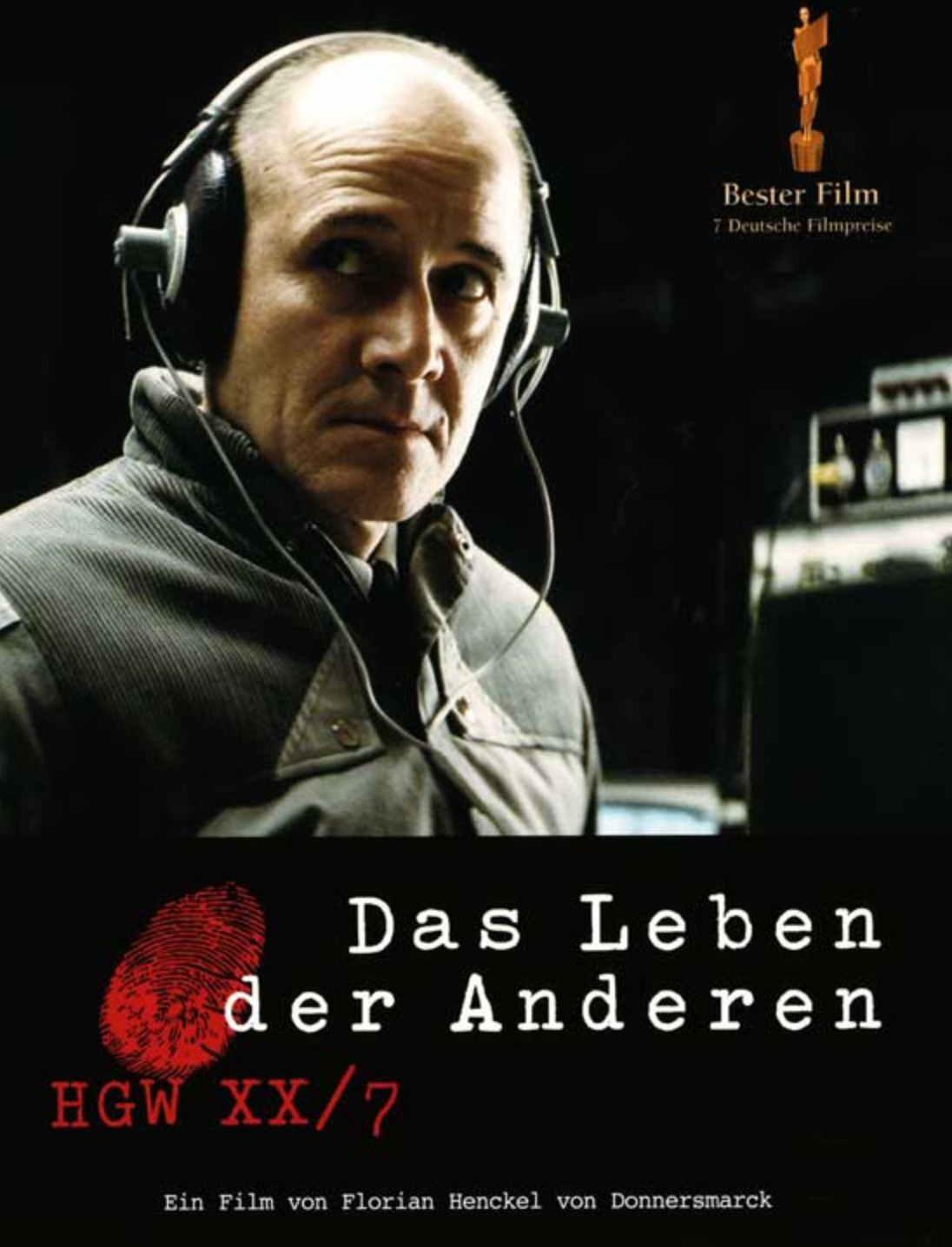

They're building something that would make the Stasi jealous, except now it's automated, scales infinitely, and comes wrapped in good intentions. Every message scanned, every image analyzed, every communication filtered through systems that will inevitably expand beyond their original scope. Because that's what always happens. What protects us from its misuse by whatever government comes next?

The infrastructure they're building today becomes tomorrow's tool for whatever they want to monitor. Economic dissent during the next crisis. Organizing during the next protest. Whatever threatens whoever's in charge. The technical capability, once built, doesn't go away.

So Here We Are

Maybe I'm wrong. Maybe this time will be different. Maybe they'll only use it for protecting children, never expand its scope, never abuse the capability, never have a data breach, never elect someone who sees this infrastructure as an opportunity.

But I spent hours cross-referencing legal opinions and checking those statistics because something felt off. And the more I dig, the more it looks like they're betting we'll get tired of fighting this. That we'll accept it because, well, what choice do we have?

With 15 countries supporting, 3 opposing, and 9 undecided, they're probably right. Even if every single undecided country flips to opposition, that's still 15-12 in favor. This will likely pass. Your private communications will be scanned. The infrastructure will be built. And in a few years, we'll wonder how we let it happen, even though we saw it coming.
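The "15-12" count is a plain headcount. In the Council, the bill needs a qualified majority under Article 16(4) TEU: at least 55% of member states (15 of 27) representing at least 65% of the EU population, and a blocking minority must include at least four states. So 15 supporters clear the headcount bar exactly, and the rest hinges on how many people those 15 governments represent. Here is a minimal sketch of that rule, assuming all 27 member states vote; `council_qmv_reached` is a hypothetical helper, and the population percentages in the usage lines are made-up inputs, not the real figures for the current supporters.

```python
def council_qmv_reached(states_for: int, pop_share_for_pct: float,
                        total_states: int = 27) -> bool:
    """Rough check of the Council's standard qualified-majority rule.

    states_for: member states voting yes (abstentions and "no" both count as not-yes).
    pop_share_for_pct: percentage of the EU population living in those states.
    """
    not_for = total_states - states_for
    # A blocking minority needs at least four member states; with fewer
    # than four not-yes votes, the qualified majority is deemed reached.
    if not_for < 4:
        return True
    # Integer comparison avoids float rounding: 55% of 27 states is 14.85, so 15 are needed.
    enough_states = states_for * 100 >= 55 * total_states
    enough_people = pop_share_for_pct >= 65.0
    return enough_states and enough_people


# Hypothetical population shares, NOT real data for the current coalition.
print(council_qmv_reached(15, 66.0))  # True
print(council_qmv_reached(15, 62.0))  # False
print(council_qmv_reached(14, 80.0))  # False
```

Because abstaining counts the same as voting no under this rule, an undecided government that simply sits it out still helps block the bill, provided the blocking side adds up to more than 35% of the population.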

At least now you know what's happening. Do with that what you will.

If you want to try anyway: fightchatcontrol.eu has the petition. Your representatives need to hear from you. The holdout countries need pressure. Maybe this time enough people will care. I doubt it, but maybe.